Introduction to Build Contexts in MirageOS 4

By Lucas Pluvinage - 2022-03-30

In this blog post, we'll discover build contexts, one of the central changes of MirageOS 4. It's a feature from the Dune build system that enables fully-customizable cross-compilation. We'll showcase its usage by cross-compiling a unikernel to deploy it on an arm64 Linux machine using KVM. This way, a powerful machine does the heavy lifting while a more constrained device such as a Raspberry Pi or a DevTerm deploys it.

We recommend having some familiarity with the MirageOS project in order to fully understand this article. See mirageos.org/docs for more information on that matter.

The unikernel we'll deploy is a caching DNS resolver: https://github.com/mirage/dns-resolver. In a network configuration, the DNS resolver translates domain names to IP addresses, so that a personal computer knows which IP address to contact when accessing mirageos.org. See the first 10 minutes of this YouTube video for a more precise introduction to DNS.

It's common that your ISP provides a default DNS resolver that's automatically set up when connecting to your network (see DHCP), but this may come with privacy issues. The Internet People™ recommend using 1.1.1.1 (Cloudflare) or 8.8.8.8 (Google), but a better solution is to self-host your resolver or use one set up by someone you trust.

The MirageOS 4 Build System

Preliminary Steps

Let's start by setting up MirageOS 4, fetching the project, and configuring it for hvt. hvt is a Solo5-based target that exploits KVM to perform virtualization.

$ opam install "mirage>4" "dune>=3.2.0"

$ git clone https://github.com/mirage/dns-resolver

$ cd dns-resolver

dns-resolver $ mirage configure -t hvt

What is a Configured Unikernel?

In MirageOS 4, a configured unikernel is obtained by running the mirage configure command in a folder where a config.ml file resides. This file describes the requirements to build the application, usually a unikernel.ml file.

The following hierarchy is obtained. It's quite complex, but today the focus is on the Dune-related part of it:

dns-resolver/

┣ config.ml

┣ unikernel.ml

┃

┣ Makefile

┣ dune <- switch between config and build

┣ dune.config <- configuration build rules

┣ dune.build <- unikernel build rules

┣ dune-project <- dune project definition

┣ dune-workspace <- build contexts definition

┣ mirage/

┃ ┣ context

┃ ┣ key_gen.ml

┃ ┣ main.ml

┃ ┣ <...>-<target>-monorepo.opam

┃ ┗ <...>-<target>-switch.opam

┗ dist/

  ┗ dune <- rules to produce artifacts

To set up the switch state and fetch dependencies, use the make depends command. Under the hood (see the Makefile), this calls opam and opam-monorepo to gather dependencies. When the command succeeds, a duniverse/ folder is created, which contains the unikernel's runtime dependencies.

$ make depends

While obtaining dependencies, let's start to investigate the Dune-related files.

dune Files

./dune

dune files describe build rules and high-level operations so that the build system can obtain a global dependency graph and know about what's available to build. See dune-files for more information.

In our case, this file acts as a switch between two states. At the configuration stage (after calling mirage configure), it contains:

(include dune.config)

If the configuration is successful, the content is then replaced by (include dune.build).
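As a rough illustration of this switch, here is a minimal shell sketch that reports which stage a configured unikernel is in by inspecting its top-level dune file. The dune_stage helper is hypothetical and not part of the Mirage tool; the demonstration only mimics, in a scratch directory, the two contents described above.

```shell
# Hypothetical helper (not part of the mirage tool): report which stage
# a configured unikernel is in by inspecting its top-level dune file.
dune_stage() {
  dune_file=${1:-dune}
  if grep -qF '(include dune.build)' "$dune_file"; then
    echo build
  elif grep -qF '(include dune.config)' "$dune_file"; then
    echo config
  else
    echo unknown
  fi
}

# Demonstration in a scratch directory, mimicking what the tool writes.
scratch=$(mktemp -d)
printf '(include dune.config)\n' > "$scratch/dune"
echo "after mirage configure: $(dune_stage "$scratch/dune")"
printf '(include dune.build)\n' > "$scratch/dune"
echo "after configuration succeeds: $(dune_stage "$scratch/dune")"
rm -rf "$scratch"
```

Inside a real project, running dune_stage with no argument would inspect ./dune in the current directory.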

./dune.config

(data_only_dirs duniverse)

(executable

(name config)

(flags (:standard -warn-error -A))

(modules config)

(libraries mirage))

Here, two things are happening. First, the duniverse/ folder is declared as data-only, because we don't want it to interfere with the configuration build, as it should only depend on the global switch state.

Second, a config executable is declared. It contains the second stage of the configuration process, which is executed to generate dune.build, dune-workspace, and various other files required to build the unikernel.

./dune-workspace

The workspace declaration file is a single file at a Dune project's root and describes global settings for the project. See the documentation.

First, it declares the Dune language used and the compilation profile, which is release.

(lang dune 2.0)

(profile release)

For cross-compilation to work, two contexts are declared.

The host context simply imports the configuration from the Opam switch:

(context (default))

We use the target context in a more flexible way, and there are many fields allowing users to customize settings such as:

- OCaml compilation and linking flags

- C compilation and linking flags

- Dynamic linking

- OCaml compiler toolchain: any compiler toolchain described by a findlib.conf file in the switch can be used by Dune in a build context. See https://linux.die.net/man/5/findlib.conf for more details on how to write such a file. An important fact about the compiler toolchain is that Dune derives the C compilation rules from the configuration, as described in ocamlc -config.

(context (default

(name solo5) ; name of the context

(host default) ; inform dune that this is cross-compilation

(toolchain solo5) ; use the ocaml-solo5 compiler toolchain

(merlin) ; enable merlin for this context

(disable_dynamically_linked_foreign_archives true)

))

./dune.build

When configuration is done, this file is included by ./dune.

- The generated source code is imported along with the unikernel sources:

(copy_files ./mirage/*)

- An executable is declared within the cross-compilation build context, using the statically-known list of dependencies:

(executable

(enabled_if (= %{context_name} "solo5"))

(name main)

(modes (native exe))

(libraries arp.mirage dns dns-mirage dns-resolver.mirage dns-server

ethernet logs lwt mirage-bootvar-solo5 mirage-clock-solo5

mirage-crypto-rng-mirage mirage-logs mirage-net-solo5 mirage-random

mirage-runtime mirage-solo5 mirage-time tcpip.icmpv4 tcpip.ipv4

tcpip.ipv6 tcpip.stack-direct tcpip.tcp tcpip.udp)

(link_flags :standard -w -70 -color always -cclib "-z solo5-abi=hvt")

(modules (:standard \ config manifest))

(foreign_stubs (language c) (names manifest))

)

- Solo5 requires the usage of a small chunk of C code derived from a manifest file, which is also generated:

(rule

(targets manifest.c)

(deps manifest.json)

(action

(run solo5-elftool gen-manifest manifest.json manifest.c)))

- The obtained image is renamed, and the default alias is overridden so that dune build works as expected:

(rule

(target resolver.hvt)

(enabled_if (= %{context_name} "solo5"))

(deps main.exe)

(action

(copy main.exe %{target})))

(alias

(name default)

(enabled_if (= %{context_name} "solo5"))

(deps (alias_rec all)))

./dist/dune

Once the unikernel is built, this rule describes how it's promoted back into the source tree, inside the dist/ folder.

(rule

(mode (promote (until-clean)))

(target resolver.hvt)

(enabled_if (= %{context_name} "solo5"))

(action

(copy ../resolver.hvt %{target})))

Cross-Compiling to x86_64/hvt

If everything went correctly, the unikernel source tree should be populated with all the build rules and dependencies needed. It's just a matter of

$ make build

or

$ mirage build

or

$ dune build --root .

Finally, we obtain an hvt-enabled executable in the dist/ folder. To execute it, we must first:

- install the HVT tender: solo5-hvt, which is installed by the solo5 package.

- prepare a TAP interface for networking: note that it requires access to the Internet to be able to query the root DNS servers.

The executable can then be run using solo5-hvt --net:service=<TAP_INTERFACE> dist/resolver.hvt --ipv4=<UNIKERNEL_IP> --ipv4-gateway=<HOST_IP>.
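To make the placeholders concrete, here is a minimal sketch that prints the commands one might run, without executing them (they need root privileges and an installed solo5-hvt). The interface name tap100 and the 10.0.0.x addresses are example values, not taken from the project, and passing the unikernel address in CIDR notation is an assumption. Forwarding traffic from the TAP interface to the Internet, which the resolver needs, is not covered here.

```shell
# Example values only: pick an interface name and addresses that suit your network.
TAP=tap100
HOST_IP=10.0.0.1
UNIKERNEL_IP=10.0.0.2/24   # CIDR notation is an assumption

# Print the commands that create and bring up the TAP interface (run them as root).
tap_commands() {
  echo "ip tuntap add $TAP mode tap"
  echo "ip addr add $HOST_IP/24 dev $TAP"
  echo "ip link set dev $TAP up"
}

# Print the solo5-hvt invocation with the placeholders filled in.
run_command() {
  echo "solo5-hvt --net:service=$TAP dist/resolver.hvt --ipv4=$UNIKERNEL_IP --ipv4-gateway=$HOST_IP"
}

tap_commands
run_command
```

Printing the commands first makes it easy to review them before running each one by hand.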

Cross-Compiling to ARM64/HVT

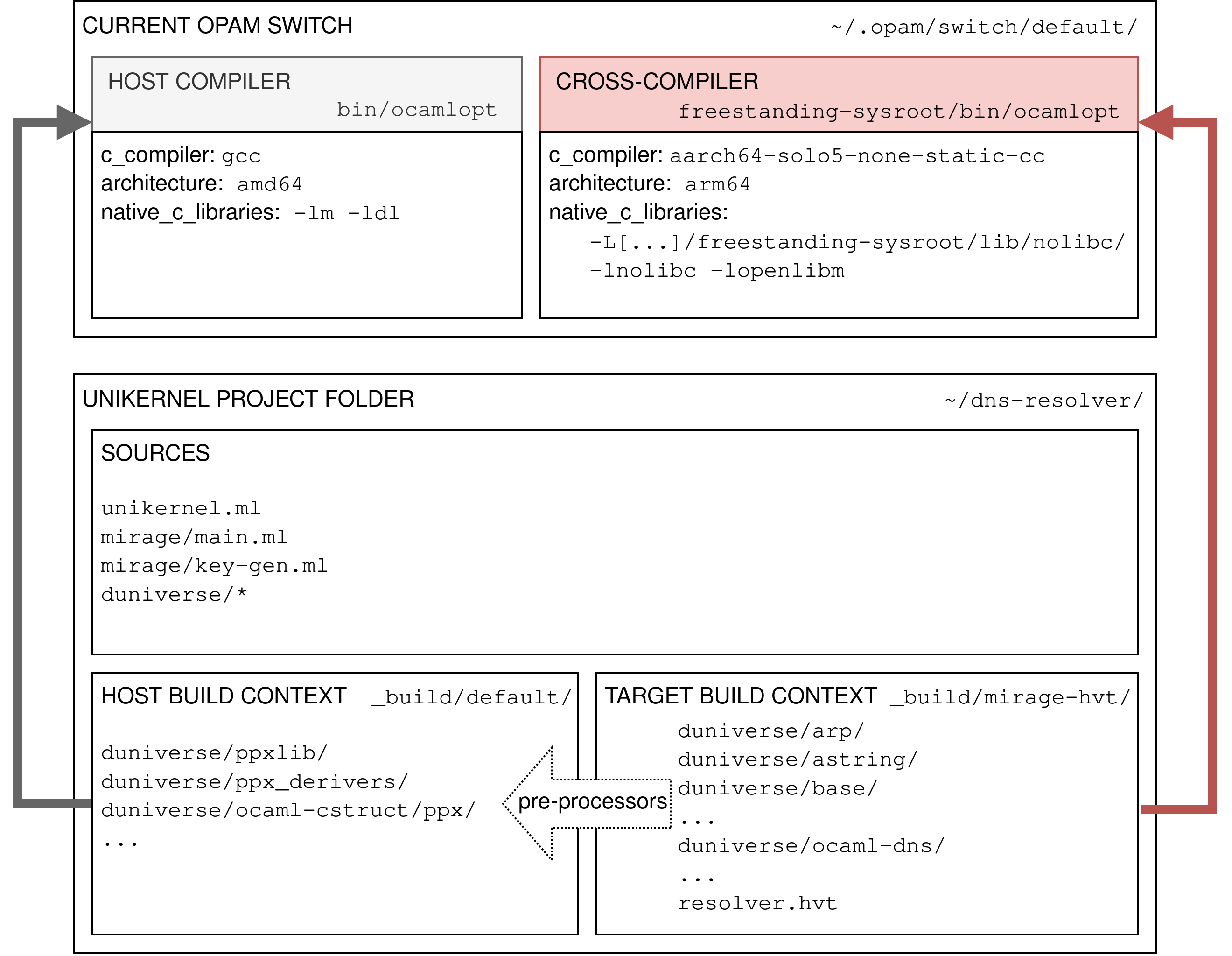

When cross-compiling to ARM64, the scheme looks like this:

So, from the Mirage build system viewpoint, nothing changes. The only part that changes is the compiler used. We switch from a host-architecture ocaml-solo5 to a cross-architecture version of ocaml-solo5.

To achieve that, we must pin a version of ocaml-solo5 configured for cross-compilation and pin the cross-compiled Solo5 distribution:

$ opam pin solo5-cross-aarch64 https://github.com/Solo5/solo5.git#v0.7.1

$ opam pin ocaml-solo5-cross-aarch64 https://github.com/mirage/ocaml-solo5.git#v0.8.0

Note that doing this will uninstall ocaml-solo5, because both packages define the same toolchain name, solo5.

KVM is now enabled by default in most Raspberry Pi kernel distributions, but for historical interest, this blog post shows how to enable KVM and cross-compile the Linux kernel: https://mirageos.org/docs/arm64

Then, simply run one of the build commands used earlier (make build, mirage build, or dune build --root .), for example:

$ make build

A cross-compiled binary will appear in the dist/ folder:

$ file dist/resolver.hvt

dist/resolver.hvt: ELF 64-bit LSB executable, ARM aarch64, version 1 (SYSV), statically linked, interpreter /nonexistent/solo5/, for OpenBSD, with debug_info, not stripped
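If the file utility is not at hand, the same check can be sketched by reading the ELF header directly. The is_aarch64_elf helper below is hypothetical. It assumes a little-endian ELF file and compares the e_machine field (bytes 18 and 19 of the header) with EM_AARCH64, whose value is 183 (0xb7).

```shell
# Hypothetical helper: succeed if the file is an ELF binary whose e_machine
# field (bytes 18-19 of the header) is EM_AARCH64 (183, stored as b7 00
# in little-endian order).
is_aarch64_elf() {
  magic=$(od -An -tx1 -N4 "$1" | tr -d ' \n')
  machine=$(od -An -tx1 -j18 -N2 "$1" | tr -d ' \n')
  [ "$magic" = "7f454c46" ] && [ "$machine" = "b700" ]
}

# Only inspect the unikernel if it has been built.
if [ -f dist/resolver.hvt ]; then
  if is_aarch64_elf dist/resolver.hvt; then
    echo "dist/resolver.hvt targets aarch64"
  else
    echo "dist/resolver.hvt is not an aarch64 ELF binary"
  fi
fi
```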

On the Raspberry Pi target, simply copy the unikernel binary, install the Solo5 tender (opam install solo5), and run solo5-hvt resolver.hvt (with the same networking arguments as in the x86_64 case) to execute the unikernel.

Compiling to a New Target or Architecture

Case 1: An Already Known Mirage Target (Unix / HVT / etc.)

In that situation, mirage configure -t <target> should already output the correct source code and dependencies for the target. This is notably under the assumption that the involved C code is portable.

The dune-workspace can then be tweaked to reference the wanted cross-compiler distribution. ocaml-solo5 is an example of how a cross-compiler distribution can be set up and installed inside an Opam switch.
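As a sketch of such a tweak, the hypothetical gen_workspace helper below prints a dune-workspace modelled on the one shown earlier, with only the toolchain field changed. The toolchain name my-toolchain is made up: a findlib toolchain with that name would have to exist in the switch. The context name stays solo5 because the generated build rules are enabled on that name. Keep in mind that running mirage configure again may overwrite a hand-edited dune-workspace.

```shell
# Hypothetical helper: print a dune-workspace that keeps the solo5 context
# name but builds with the findlib toolchain given as first argument.
gen_workspace() {
  toolchain=$1
  cat <<EOF
(lang dune 2.0)
(profile release)
(context (default))
(context (default
  (name solo5)
  (host default)
  (toolchain $toolchain)
  (merlin)
  (disable_dynamically_linked_foreign_archives true)
))
EOF
}

# "my-toolchain" is a made-up name used for illustration.
gen_workspace my-toolchain
```

Redirecting the output to dune-workspace at the project's root would apply the change.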

Case 2: A New Target

In this situation, a more in-depth understanding of Mirage is required.

- Set up a cross-compiler distribution: see previous case.

- Implement a base layer: an OCaml module named <Target>_os is required to implement the base features of MirageOS, namely job scheduling and timers. See mirage-solo5.

- Implement the target signature in the Mirage tool: Mirage_target.S notably describes the packages required and the Dune rules needed to build for that target.

- To obtain feature parity with the other Mirage targets and be able to use the existing devices, device drivers should be implemented:

- Networking: see mirage-net-solo5

- Console: see mirage-console-solo5

- Block Device: see mirage-block-solo5

- Clock: see mirage-clock-solo5

Conclusion

This blog post shows how the Mirage tool acts as super glue between the build system, the Mirage libraries, the host system, and the application code. One of the major changes with MirageOS 4 is the switch from OCamlbuild to Dune.

Using Dune to build unikernels enables cross-compilation through build contexts that use various toolchains. It also enables the usage of the Merlin tool to provide IDE features when writing the application. Finally, a single workspace containing all the unikernels' code lets developers investigate and edit code anywhere in the stack, allowing for fast iterations when debugging libraries and improving APIs.